We've described the battle between VMware and Microsoft as a hypervisor war going back as far as the first release of Microsoft Virtual Server. Unfortunately for Microsoft, that war was pretty ugly. On one side, VMware had guns and cannons; on the other, Microsoft was throwing rocks. OK, maybe it wasn't quite that bad, but you get the picture.

Fast forward, and Microsoft has added a lot of weaponry with the introduction of Microsoft Hyper-V, the company's true hypervisor. The Redmond giant's offering just keeps getting better. But so does VMware's ESX hypervisor. A virtual tug-of-war, if you will.

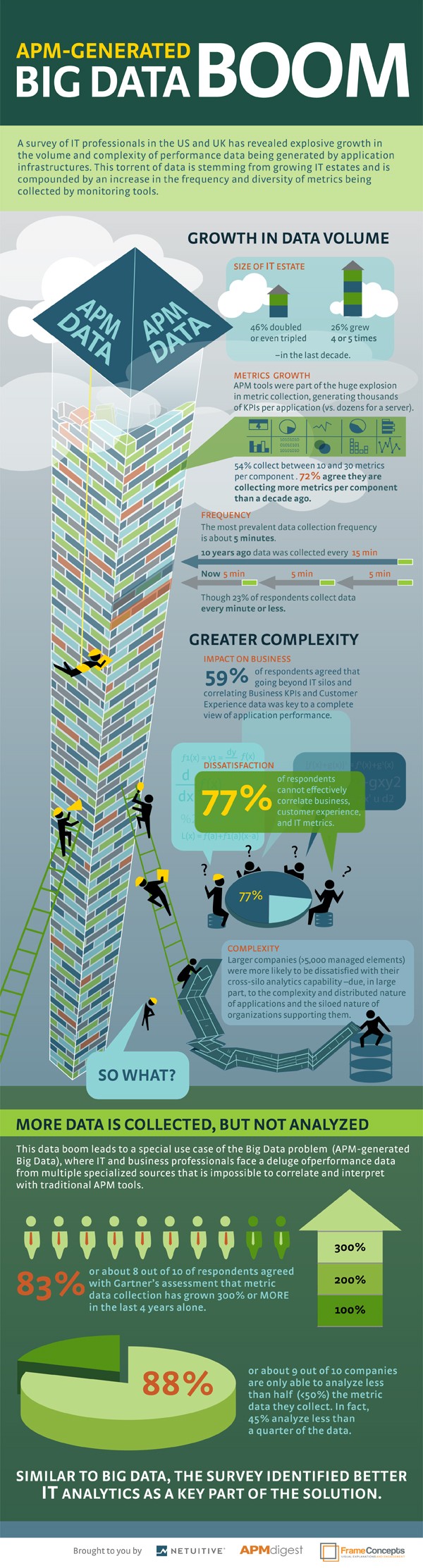

Check out this latest infographic from the folks at SolarWinds. Instead of depicting this as a hypervisor war, the infographic calls it the Hypervisor Tug-of-War.

The survey data clearly show that VMware remains in control. However, there are some interesting data points in there as well:

- Survey respondents believe VMware will be, far and away, the most trusted private cloud vendor, with a whopping 76.9%.
- However, 76.1% believe Microsoft will close the functionality gap with VMware thanks to Hyper-V 3.0.
- While organizations try to break through the "VM stall" barrier, it sounds like 56.8% of key applications will be deployed on a private cloud next year, and 57.7% have already moved beyond the 40% mark for datacenter virtualization.
- 70% of end users still deploy more than one virtualization tool.

And at the bottom, we see that VMware still commands a lead in the hypervisor tug-of-war.
